At some point in 2010 I started noticing fast food restaurants in California, both in the SF Bay Area and in Los Angeles County, offering to accept payment via EBT (Electronic Benefits Transfer, the system used for food stamp programs). This is a change from the prior rules, under which EBT could only be used for unprepared food, e.g. from the grocery store. Under programs such as Los Angeles County's Restaurant Meals Program, it is now possible to use food stamps to buy fast food, e.g. pizza from Pizza Hut. Before I get into the possible positive and negative effects of this policy change, I want to explain its effects on the market equilibrium for fast food.

In short, this policy change creates a kink in the demand curve for fast food: low income EBT recipients increase their demand, while demand from higher income customers does not change.

Consider two fast food customers. Person A earns an income that disqualifies him from EBT. Person B earns a low enough income to qualify for EBT and has chosen to receive those benefits.

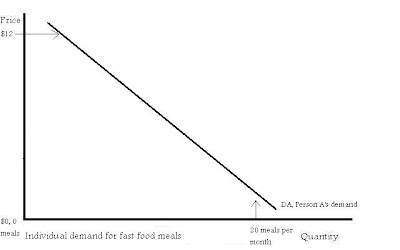

Here is person A's demand curve:

You can see that if fast food were practically free, Person A would consume 20 meals per month. Also notice that, because he has the budget for it, Person A would still buy a small quantity of fast food each month even at the high price of $12 per meal.

Now consider Person B's more modestly budgeted demand curve. This is Person B's demand for fast food meals before the policy change that allows him to purchase fast food with EBT.

Notice that at a price of $12 per meal, Person B will not buy any fast food meals; that is beyond his budget. So what happens to Person B's demand curve when he can suddenly use EBT to purchase these meals? The policy change shifts his demand curve for fast food to the right, because he can now afford more fast food meals at various prices. Here's his new demand curve:
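To make the two individual curves concrete, here is a minimal Python sketch assuming straight-line demand. Only Person A's 20 meals per month at a near-zero price comes from the text; the slopes, the $10 price at which Person B is priced out, and the function names `demand_a`, `demand_b_before` and `demand_b_after` are invented for illustration. The EBT shift is modelled (as an assumption) as Person B buying 50% more at every price below $10 while still buying nothing at $10 or above.

```python
# Hypothetical linear demand curves for one month of fast food meals.
# Only "20 meals when free" comes from the post; every other number is invented.

def demand_a(price: float) -> float:
    """Person A: 20 meals when free, still a couple of meals at $12."""
    return max(0.0, 20 - 1.5 * price)


def demand_b_before(price: float) -> float:
    """Person B without EBT for fast food: buys nothing at $10 or more."""
    return max(0.0, 10 - price)


def demand_b_after(price: float) -> float:
    """Person B with EBT accepted: buys 50% more at every price below $10,
    but is still priced out at $10 or more (an assumption)."""
    return max(0.0, 1.5 * (10 - price))


for p in (0, 4, 8, 12):
    print(f"${p:>2}: A={demand_a(p):4.1f}  "
          f"B before={demand_b_before(p):4.1f}  B after={demand_b_after(p):4.1f}")
```

In this sketch Person B's new curve lies to the right of the old one at low prices, yet both give zero meals at $12, matching the idea that EBT changes only the affordable part of his curve.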

We have now seen the effects of this policy change on two individuals, one on food stamps and one not. But what about the market demand curve? And what does this mean for market equilibrium (the point where the quantity demanded equals the quantity supplied)? Let's find out. Because the market demand curve is the horizontal sum of all the individual demand curves (at each price, add up the quantities every buyer demands), just imagine adding together the demand curves of all the Person As and Person Bs in the market. Let's say the demand and supply curves before the policy change look like this:
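Horizontal summation and the equilibrium condition can be sketched in a few lines of Python. This is a hypothetical market, not data: 100 buyers of each type, straight-line demand curves, and an invented supply curve (`market_supply`). The equilibrium is found by bisection on excess demand, which works because market demand never rises and supply never falls as the price goes up.

```python
# Hypothetical market: 100 Person As, 100 Person Bs (before the policy change),
# and an assumed linear supply curve. All numbers are invented for illustration.

N_A, N_B = 100, 100


def demand_a(price: float) -> float:
    return max(0.0, 20 - 1.5 * price)


def demand_b(price: float) -> float:
    return max(0.0, 10 - price)


def market_demand(price: float) -> float:
    # Horizontal summation: add every buyer's quantity demanded at the same price.
    return N_A * demand_a(price) + N_B * demand_b(price)


def market_supply(price: float) -> float:
    # Assumed: nothing is supplied below $3, then 500 more meals per extra $1.
    return max(0.0, 500 * price - 1500)


def equilibrium(demand, supply, lo=0.0, hi=100.0, tol=1e-9):
    """Bisection on excess demand, which never rises as price rises."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if demand(mid) > supply(mid):
            lo = mid  # shortage at this price: equilibrium is higher
        else:
            hi = mid  # surplus (or balance): equilibrium is at or below this price
    price = (lo + hi) / 2
    return price, supply(price)


p1, q1 = equilibrium(market_demand, market_supply)
print(f"P1 = ${p1:.2f}, Q1 = {q1:.0f} meals per month")
```

With these made-up numbers the sketch finds P1 = $6.00 and Q1 = 1,500 meals. Bisection is used rather than solving the linear equations directly so that the same routine still works when the demand curve has kinks.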

The market price is P1 and the quantity sold is Q1. Now suppose the county government for this market suddenly allows EBT to be used to buy fast food. What does this do to the demand curve? Higher income customers who don't receive food stamps, like Person A, shape the entire market demand curve, from the highest prices to the lowest. Lower income food stamp recipients like Person B shape only its lower part, because they are priced out at high prices. So when EBT becomes usable for fast food, only the lower part of the market demand curve shifts outward. (In reality the shift would probably be much less pronounced; I have exaggerated it for demonstration.) Here is the new market demand curve:

The result is a kink in the demand curve at the price where most food stamp recipients would be priced out of the market; below that price, the curve pushes outward. Assuming the supply curve crosses the demand curve below the kink, the equilibrium price rises to P2 and the equilibrium quantity to Q2. Thus this policy is a good thing for fast food companies, increasing their revenue by the amount of:

$$P_2 Q_2 - P_1 Q_1$$
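The kinked curve and the revenue formula can be checked with a small Python sketch. All numbers are assumptions, not data: 100 buyers of each type, a $10 price (`KINK`) above which Person Bs buy nothing with or without EBT, and an invented linear supply curve. EBT is modelled as raising Person B's purchases by 50% at every price below the kink, so only the lower part of the market demand curve moves.

```python
# Hypothetical market: 100 Person As, 100 Person Bs, an assumed linear supply
# curve, and an assumed $10 price above which Person Bs buy no fast food.

N_A, N_B = 100, 100
KINK = 10.0


def demand_a(p: float) -> float:
    return max(0.0, 20 - 1.5 * p)


def demand_b(p: float, ebt_allowed: bool) -> float:
    # EBT raises Person B's purchases at every price below KINK, none above it.
    scale = 1.5 if ebt_allowed else 1.0
    return max(0.0, scale * (KINK - p))


def supply(p: float) -> float:
    return max(0.0, 500 * p - 1500)


def equilibrium(ebt_allowed: bool, lo=0.0, hi=100.0, tol=1e-9):
    # Bisection on excess demand of the (kinked) market demand curve.
    def demand(p):
        return N_A * demand_a(p) + N_B * demand_b(p, ebt_allowed)

    while hi - lo > tol:
        mid = (lo + hi) / 2
        if demand(mid) > supply(mid):
            lo = mid
        else:
            hi = mid
    p = (lo + hi) / 2
    return p, supply(p)


p1, q1 = equilibrium(ebt_allowed=False)
p2, q2 = equilibrium(ebt_allowed=True)
print(f"before: P1 = ${p1:.2f}, Q1 = {q1:.0f}")
print(f"after:  P2 = ${p2:.2f}, Q2 = {q2:.0f}")
print(f"revenue change (P2*Q2) - (P1*Q1) = ${p2 * q2 - p1 * q1:,.2f}")
```

With these invented numbers the price rises from $6.00 to $6.25 and the quantity from 1,500 to 1,625 meals, so revenue rises by about $1,156. Above $10 the before and after market demand curves coincide, which is exactly the kink.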

So what is the significance of the kink in the demand curve? Probably nothing in today's market, because fast food prices usually stay low enough that the portion of the demand curve above the kink never comes into play. In effect the change probably behaves like a shift of the entire demand curve, because the upper reaches may not matter for equilibrium. However, if there were suddenly massive supply shocks in the inputs for fast food, e.g. a global potato crop failure, the price might rise high enough that more and more Person Bs get priced out. Let's hope that doesn't happen anytime soon. Nonetheless, it's interesting and worth noting that (at least according to my reasoning) the whole demand curve does not shift, just the lower portion.
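The supply shock scenario can also be sketched in Python. This hypothetical model assumes 100 buyers of each type, a $10 price above which Person Bs buy nothing, and a shocked supply curve (`shocked_supply`) under which firms offer nothing below $12; none of these numbers come from real data. It shows that once the equilibrium price is above the kink, allowing EBT no longer changes the outcome.

```python
# Hypothetical supply shock (think potato crop failure): firms now supply
# nothing below $12. Demand numbers are invented, with Person Bs assumed to be
# priced out at $10 whether or not EBT is accepted.

N_A, N_B, KINK = 100, 100, 10.0


def market_demand(p: float, ebt_allowed: bool) -> float:
    scale_b = 1.5 if ebt_allowed else 1.0
    return N_A * max(0.0, 20 - 1.5 * p) + N_B * max(0.0, scale_b * (KINK - p))


def shocked_supply(p: float) -> float:
    return max(0.0, 500 * p - 6000)


def equilibrium_price(ebt_allowed: bool, lo=0.0, hi=100.0, tol=1e-9) -> float:
    # Bisection on excess demand (demand minus supply falls as price rises).
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if market_demand(mid, ebt_allowed) > shocked_supply(mid):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


for ebt in (False, True):
    p = equilibrium_price(ebt)
    print(f"EBT allowed={ebt}: price ${p:.2f}, quantity {shocked_supply(p):.0f}")
```

Here the equilibrium price (about $12.31) sits above the assumed $10 kink, so the result is identical with or without EBT: only the upper, unshifted part of the demand curve matters.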

Now that I've looked at my graphs, what do I think of this policy?

I'm conflicted on this issue. Fast food is usually bad for you (Subway being a notable exception). Nonetheless, a food stamp recipient can find food that is just as bad at the local grocery store. There is nothing in the law to prevent a food stamp recipient from using EBT to eat nothing but sticks of butter and Cap'n Crunch cereal. Also, for homeless food stamp recipients, who lack kitchen tools, buying prepared food can be the easiest way to get a hot meal. The issue here is how paternalistic we want our government's social welfare programs to be, and no demand and supply diagram can easily answer that question. Given my political preferences, I lean toward allowing EBT purchases of prepared food. Not all of the poor will give themselves heart disease because of this policy change. Some will. But really this is a separate issue. More nutritional education (public service announcements, etc.) is the key to getting people to demand less junk food in the first place. The "invisible hand" would then force fast food companies to offer better choices. Ever since the movie "Super Size Me", I think we've already seen this happening.

I honestly don't know what the best policy is in this case. All I can say is that, judging from the demand and supply curves alone, this is a great policy for fast food companies' profit margins. It is also bad for the profit margins of grocery stores, which no longer have a monopoly on EBT food transactions.